Metadata Considered Harmful ... to Deduplication

| Venue | Category |
|---|---|
| HotStorage'15 | Deduplication Metadata |


1. Summary

Motivation of this paper

The effectiveness of deduplication can vary dramatically depending on the data stored.

This paper shows that many file formats suffer from a fundamental design property that is incompatible with deduplication: they intersperse metadata with data in ways that make otherwise identical data look different.

Little work has been done to examine the impact of input data on deduplication.

Metadata meets deduplication

Main idea: separate metadata from data

- design deduplication-friendly formats

- application-level post-processing

- format-aware deduplication

- Metadata impacts deduplication

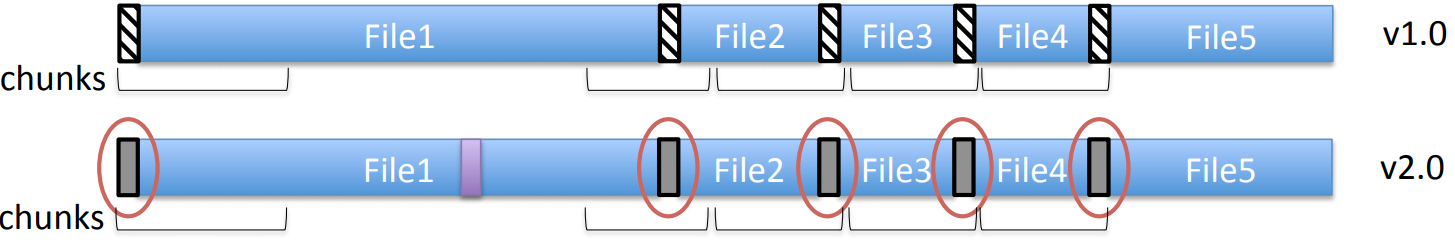

- Metadata changes: the input is an aggregate of many small user files, interleaved with per-file metadata (file path, timestamps, etc.).

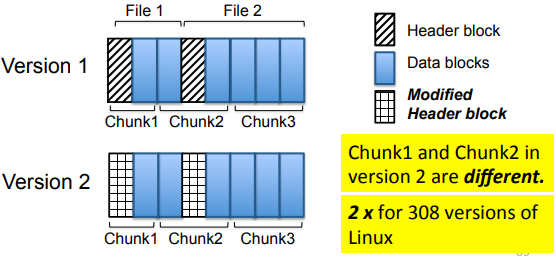

By mixing more frequently changing metadata with data blocks, the tar format unnecessarily introduces many more unique chunks.

- Metadata location changes: the input is encoded in blocks and metadata is inserted for each block, so data insertions or deletions shift the metadata.
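The metadata-change case can be illustrated with a small sketch. It compares a tar-like layout, where a metadata field sits in front of each file's data, with a layout that groups all metadata at the end, and counts how many chunks two versions still share after only a timestamp changes. The fixed-size chunking, the 16-byte chunk size, the 4-byte "timestamp", and the helper names `fingerprints`, `interleaved`, `separated`, and `shared_chunks` are assumptions made for illustration and are not taken from the paper.

```python
import hashlib

CHUNK = 16  # toy fixed chunk size in bytes (assumption; real systems use KB-sized chunks)


def fingerprints(stream: bytes, size: int = CHUNK) -> set:
    """Split a stream into fixed-size chunks and return the set of SHA-1 fingerprints."""
    return {hashlib.sha1(stream[i:i + size]).digest()
            for i in range(0, len(stream), size)}


def interleaved(files, mtime: bytes) -> bytes:
    """tar-like layout: a metadata field directly in front of each file's data."""
    return b"".join(mtime + data for data in files)


def separated(files, mtime: bytes) -> bytes:
    """Layout with all file data first and all metadata grouped at the end."""
    return b"".join(files) + mtime * len(files)


def shared_chunks(old: bytes, new: bytes) -> int:
    """Number of distinct chunks that appear in both versions."""
    return len(fingerprints(old) & fingerprints(new))


# Three unchanged 12-byte files; only the hypothetical timestamp differs between versions.
files = [b"a" * 12, b"b" * 12, b"c" * 12]

print(shared_chunks(interleaved(files, b"T001"), interleaved(files, b"T002")))  # 0
print(shared_chunks(separated(files, b"T001"), separated(files, b"T002")))      # 2
```

In this toy setup every chunk of the interleaved layout contains a timestamp, so no chunk survives the update, while the separated layout confines the change to the final chunk. The sketch does not model the metadata-location-change case.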

- Deduplication-friendly formats

- A common data format that separates data from metadata (EMC backup format)

The metadata of all files is grouped together and stored in one section.

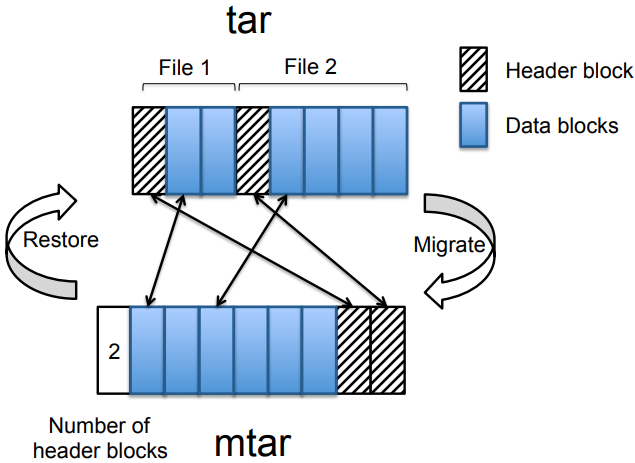

- Application-level post-processing (Migratory tar)

tar is a well-defined data format that is unfriendly to deduplication. It has been in wide use for decades, so it is hard to change for compatibility reasons.

A tar file is a sequence of entries. Each entry consists of one header block followed by its data blocks.

Migratory tar (mtar)

mtar separates metadata from data blocks by colocating the metadata blocks at the end of the mtar file.

The offset of the metadata blocks is stored in the first block of the mtar file for efficient access.

A restore operation reads the first block to find the first header block, reads all data blocks for that file, and repeats this process for every file.
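The layout and restore procedure described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the encoding of the first block (the metadata offset as ASCII decimal, NUL-padded to 512 bytes) and the function names `split_entries`, `tar_to_mtar`, `mtar_to_tar`, and `make_tar` are assumptions, and the sketch only handles plain ustar archives without extended headers.

```python
import io
import tarfile

BLOCK = 512  # tar block size in bytes


def entry_size(header: bytes) -> int:
    """Parse the octal size field (bytes 124..135) of a ustar header block."""
    field = header[124:136].split(b"\0")[0].strip()
    return int(field.decode("ascii") or "0", 8)


def padded_size(header: bytes) -> int:
    """File size rounded up to a whole number of blocks."""
    return -(-entry_size(header) // BLOCK) * BLOCK


def split_entries(tar_bytes: bytes):
    """Yield (header_block, data_blocks) for each entry of a plain ustar archive."""
    pos = 0
    while pos + BLOCK <= len(tar_bytes):
        header = tar_bytes[pos:pos + BLOCK]
        if header == bytes(BLOCK):  # an all-zero block marks the end of the archive
            break
        size = padded_size(header)
        yield header, tar_bytes[pos + BLOCK:pos + BLOCK + size]
        pos += BLOCK + size


def tar_to_mtar(tar_bytes: bytes) -> bytes:
    """Move all header blocks to the end; the first block records where they start."""
    entries = list(split_entries(tar_bytes))
    data = b"".join(d for _, d in entries)
    headers = b"".join(h for h, _ in entries)
    meta_offset = BLOCK + len(data)
    first = str(meta_offset).encode("ascii").ljust(BLOCK, b"\0")  # assumed encoding
    return first + data + headers


def mtar_to_tar(mtar_bytes: bytes) -> bytes:
    """Restore: read the offset, then pair each header with its data blocks in order."""
    meta_offset = int(mtar_bytes[:BLOCK].rstrip(b"\0").decode("ascii"))
    data_pos, out = BLOCK, []
    for hpos in range(meta_offset, len(mtar_bytes), BLOCK):
        header = mtar_bytes[hpos:hpos + BLOCK]
        size = padded_size(header)
        out.append(header + mtar_bytes[data_pos:data_pos + size])
        data_pos += size
    return b"".join(out) + bytes(2 * BLOCK)  # two zero blocks terminate a tar archive


def make_tar(files: dict) -> bytes:
    """Build a small in-memory ustar archive from {name: payload}."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w", format=tarfile.USTAR_FORMAT) as tf:
        for name, payload in files.items():
            info = tarfile.TarInfo(name)
            info.size = len(payload)
            tf.addfile(info, io.BytesIO(payload))
    return buf.getvalue()
```

Because the restore has to alternate between the header region at the end of the file and the data region at the front, this sketch also hints at why restore performance of such a layout is worth measuring.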

Implementation and Evaluation

- Implementation:

- Modify the tar program to support conversion between tar and mtar

- Use fs-hasher to perform chunking for the comparison

- Evaluation dataset: the Linux kernel distribution

- Compare the deduplication ratio across different methods
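For reference, one common way to define the deduplication ratio is logical bytes written divided by bytes actually stored after duplicate chunks are removed. The notes do not record the paper's exact definition or chunking parameters, so the sketch below is an assumption: it uses fixed-size 4096-byte chunks and SHA-1 fingerprints, and `dedup_ratio` is a hypothetical helper rather than part of fs-hasher.

```python
import hashlib


def dedup_ratio(backups, chunk_size: int = 4096) -> float:
    """Logical bytes divided by stored bytes after chunk-level deduplication.

    `backups` is an iterable of byte strings, e.g. successive archive versions.
    """
    seen = set()
    logical = stored = 0
    for stream in backups:
        for i in range(0, len(stream), chunk_size):
            chunk = stream[i:i + chunk_size]
            logical += len(chunk)
            fp = hashlib.sha1(chunk).digest()
            if fp not in seen:  # only the first copy of a chunk is stored
                seen.add(fp)
                stored += len(chunk)
    return logical / stored if stored else 0.0


v1 = b"A" * 4096 + b"B" * 4096
v2 = b"A" * 4096 + b"C" * 4096
print(dedup_ratio([v1, v1]))  # 2.0: the second copy adds no new chunks
print(dedup_ratio([v1, v2]))  # about 1.33: one of four chunks is a duplicate
```

A higher ratio means more redundancy was removed, so comparing this number for tar and mtar inputs over the same set of versions shows how much the layout alone affects deduplication.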

2. Strength (Contributions of the paper)

- The main contribution of this paper is that it identifies and categorizes barriers to deduplication and provides two case studies:

  - Industrial experience: EMC Data Domain

  - Academic research: GNU tar

3. Weakness (Limitations of the paper)

- The idea of this paper is very simple. My only concern is the restore performance of mtar.

4. Future Works

- The key insight of this paper is that separating metadata from data can improve the deduplication ratio significantly.

- This paper also argues that data formats need to be designed to be deduplication-friendly.

The application can improve deduplication in a platform-independent manner while isolating the storage system from the data format.

- The tar format is unfriendly to deduplication. Specifically, the authors found that metadata blocks show no deduplication, while data blocks show high deduplication ratios.